Curbing Spend on Irrelevant Search Terms using NLP and Statistics

Megha Natarajan, February 2, 2022

To maximize your advertising reach on Amazon, it is important to consistently research and identify new keywords that drive traffic to your product. Trends and consumer behavior change, and we must keep up with them. However, keyword research can eat into your budget and reduce your advertising efficiency.

One good practice for finding new keywords is to run Auto campaigns and BROAD match keywords, then analyze the resulting search terms against advertising KPIs. Once we identify a well-performing keyword, we target it more efficiently by optimizing its bid.

Although this process is the best way to stay on top of new trends, these strategies can also lead to spend on irrelevant search terms that don’t always convert.

Why focus on search terms and not keywords?

A keyword is a biddable entity, for example ‘dog chew’.

A search term is what the customer types into the search bar.

If we bid on the keyword ‘dog chew’ with match type ‘BROAD’, we are effectively bidding on search terms that contain the words ‘dog’ and ‘chew’ or variations of them, e.g. ‘dog allergy chews’.
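To illustrate why a single BROAD keyword reaches so many search terms, here is a minimal Python sketch of a simplified broad-match rule: every keyword word, or a crude plural variant of it, must appear somewhere in the search term, in any order, with extra words allowed. This rule is an assumption for illustration only; Amazon’s actual matching logic is not public and can also match synonyms and related terms. The function names are hypothetical.

```python
import re


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def fold_plural(token: str) -> str:
    """Crude plural folding ('chews' -> 'chew'); a stand-in for real stemming."""
    if len(token) > 3 and token.endswith("s") and not token.endswith("ss"):
        return token[:-1]
    return token


def broad_match(keyword: str, search_term: str) -> bool:
    """Hypothetical simplified BROAD match: every keyword word (plural-folded)
    must appear in the search term, in any order, with other words allowed."""
    term_words = {fold_plural(t) for t in tokenize(search_term)}
    return all(fold_plural(k) in term_words for k in tokenize(keyword))


for term in ["dog allergy chews", "probiotic chews for dog", "dog chew bed", "cat scratching post"]:
    print(term, "->", broad_match("dog chew", term))
```

Under this rule ‘dog allergy chews’ and ‘dog chew bed’ both match ‘dog chew’, which is how relevant and irrelevant terms end up under the same keyword; only the unrelated ‘cat scratching post’ is excluded.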

![dog chew]()

A good keyword can be an excellent match for the product and still capture many search terms that have nothing to do with it.

How does spend end up on irrelevant search terms?

Broad match keywords and Auto campaigns are great for discovering new, well-performing keywords to optimize, but they also sweep in search terms that waste spend, mainly because those terms are irrelevant to the campaign.

Here is an example:

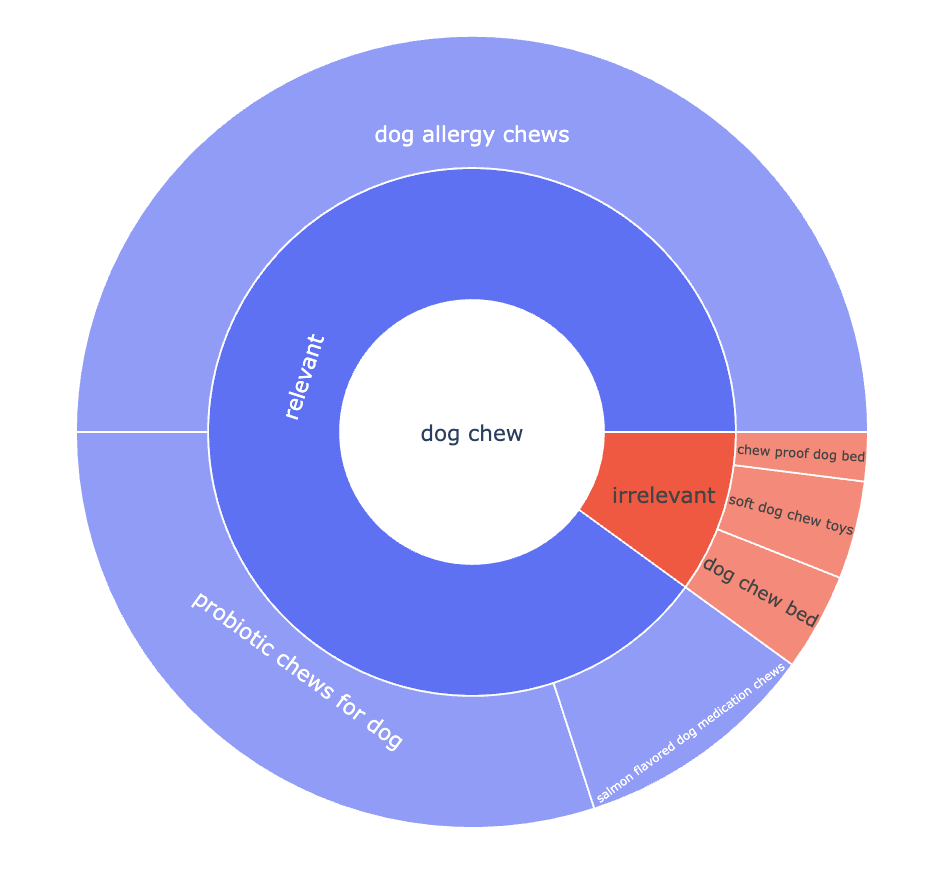

Product: Seasonal dog allergy chews in Salmon flavor

These contain probiotics but no vitamins. They are not organic.

One relevant keyword for this product is ‘dog chew’. To capture relevant search terms and harvest more well-performing keywords, we bid on ‘dog chew’ as a BROAD keyword.

This gives us access to potential good search terms:

dog allergy chews

probiotic chews for dog

salmon flavored dog medication chews

However, ‘dog chew’ also covers some irrelevant search terms:

dog chew bed

soft dog chew toys

chew proof dog bed

Someone looking for a bed or toy for dogs is not likely to purchase a seasonal dog allergy chew tablet.

The relative sizes of irrelevant and relevant spend in the example above approximate how much irrelevant spend some campaigns can accumulate.
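The share of irrelevant spend is a simple ratio: spend on search terms labeled irrelevant divided by total spend. A minimal Python sketch, using the example search terms from this post with hypothetical spend figures (the dollar amounts are invented for illustration, not measured data):

```python
# Hypothetical spend (USD) per search term; relevance labels follow the dog-chew example.
SEARCH_TERMS = [
    ("dog allergy chews", 120.0, True),
    ("probiotic chews for dog", 80.0, True),
    ("salmon flavored dog medication chews", 50.0, True),
    ("dog chew bed", 60.0, False),
    ("soft dog chew toys", 40.0, False),
    ("chew proof dog bed", 50.0, False),
]


def irrelevant_spend_share(rows: list[tuple[str, float, bool]]) -> float:
    """Fraction of total spend that went to search terms labeled irrelevant."""
    total = sum(spend for _, spend, _ in rows)
    irrelevant = sum(spend for _, spend, relevant in rows if not relevant)
    return irrelevant / total if total else 0.0


print(f"{irrelevant_spend_share(SEARCH_TERMS):.1%}")  # 37.5% with these made-up numbers
```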

In addition to BROAD match keywords, Auto campaigns can also spend heavily on search terms that are not relevant to the product.

Some ways to identify the search terms that waste spend

1. Filter based on KPIs like click-through rate, conversion rate, ACOS (advertising cost of sales), etc.

A common, simple approach is to review search terms and flag those that perform poorly on click-through rate, conversion rate, and similar metrics.
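A minimal Python sketch of this kind of KPI filter, assuming per-search-term totals for impressions, clicks, spend, orders, and attributed sales. ACOS is computed as ad spend divided by attributed sales. The thresholds (`min_clicks`, `min_ctr`, `min_cvr`, `max_acos`) and the sample rows are hypothetical placeholders, not recommended values.

```python
from dataclasses import dataclass


@dataclass
class SearchTermStats:
    term: str
    impressions: int
    clicks: int
    spend: float   # ad spend, USD
    orders: int
    sales: float   # attributed sales, USD

    @property
    def ctr(self) -> float:
        """Click-through rate: clicks / impressions."""
        return self.clicks / self.impressions if self.impressions else 0.0

    @property
    def cvr(self) -> float:
        """Conversion rate: orders / clicks."""
        return self.orders / self.clicks if self.clicks else 0.0

    @property
    def acos(self) -> float:
        """ACOS: spend / sales; infinite when the spend produced no sales."""
        return self.spend / self.sales if self.sales else float("inf")


def flag_poor_performers(rows, min_clicks=10, min_ctr=0.002, min_cvr=0.05, max_acos=0.40):
    """Return search terms that have enough clicks to judge and miss any KPI threshold."""
    flagged = []
    for r in rows:
        if r.clicks < min_clicks:
            continue  # too little data: skipped, even if the term is irrelevant
        if r.ctr < min_ctr or r.cvr < min_cvr or r.acos > max_acos:
            flagged.append(r.term)
    return flagged


rows = [
    SearchTermStats("dog allergy chews", 5000, 40, 30.0, 6, 150.0),
    SearchTermStats("dog chew bed", 4000, 25, 20.0, 0, 0.0),
    SearchTermStats("soft dog chew toys", 900, 3, 2.5, 0, 0.0),
]
print(flag_poor_performers(rows))  # ['dog chew bed']
```

Note that ‘soft dog chew toys’ is skipped for lack of clicks even though it is irrelevant, a small-scale version of the drawbacks listed next.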

However, there are several drawbacks to this method:

Some search terms may not perform well yet but are still relevant to the campaign, and could eventually have become good performers.

Reviewing performance at the search-term level is tedious and manual.

We miss a large share of search terms that are irrelevant to the campaign but cannot be weeded out by a performance filter alone.

2. Automated Unsupervised Machine Learning

We tried many approaches.

One of them was to use automated unsupervised learning with relevant KPIs.

We clustered search terms on these KPIs and identified clusters of interest. This gave us decent results: it captured the terms that naive filtering would capture, plus many that naive filtering missed. However, it sometimes caught terms that performed poorly but were not necessarily irrelevant to the product, and it missed many irrelevant search terms that the product had spent on.
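The post does not say which clustering algorithm or features were used. As an illustration only, here is a minimal k-means sketch in plain Python that clusters search terms on min-max-scaled click-through rate and conversion rate, using a deterministic farthest-point initialization. The choice of k-means, the two features, and the KPI values are all assumptions.

```python
import math


def min_max_scale(points):
    """Rescale each feature to [0, 1] so no single KPI dominates the distance."""
    columns = list(zip(*points))
    lows = [min(c) for c in columns]
    spans = [(max(c) - min(c)) or 1.0 for c in columns]
    return [tuple((v - lo) / s for v, lo, s in zip(p, lows, spans)) for p in points]


def farthest_point_init(points, k):
    """Start from the first point, then repeatedly add the point farthest from all chosen centroids."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(math.dist(p, c) for c in centroids)))
    return centroids


def kmeans(points, k, max_iter=100):
    """Plain Lloyd's k-means; returns one cluster label per point and the centroids."""
    centroids = farthest_point_init(points, k)
    labels = None
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest centroid.
        new_labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        if new_labels == labels:
            break  # converged
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return labels, centroids


# Hypothetical (CTR, CVR) per search term.
kpis = {
    "dog allergy chews": (0.010, 0.20),
    "probiotic chews for dog": (0.012, 0.18),
    "salmon flavored dog medication chews": (0.011, 0.22),
    "dog chew bed": (0.001, 0.00),
    "soft dog chew toys": (0.002, 0.01),
    "chew proof dog bed": (0.0015, 0.00),
}
terms = list(kpis)
labels, centroids = kmeans(min_max_scale(list(kpis.values())), k=2)
weak = min(range(2), key=lambda c: sum(centroids[c]))  # cluster with the lowest scaled KPIs
print([t for t, label in zip(terms, labels) if label == weak])
```

With these clean, made-up numbers the low-KPI cluster holds exactly the bed and toy terms. Real search-term data is far noisier, which is why a KPI-driven cluster can contain relevant-but-weak terms and miss irrelevant ones.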

We felt we could do better.

Our mission was to identify all irrelevant search terms, not just the ones that perform poorly.

NLP, Tokenization, and Statistical Modeling to identify irrelevant search terms

The approach that worked best for us was tokenization.

We use natural language processing (NLP), including tokenization, to identify word tokens that are irrelevant to the products in a campaign.
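The post does not publish its tokenization pipeline. One plausible first step, sketched here in Python as an assumption, is to split each search term into word tokens and roll search-term metrics up to the token level, so that a token such as ‘bed’ accumulates the spend and conversions of every search term containing it. Tokens from the bid keyword itself are skipped, because every matched search term contains them. The function names and report rows are hypothetical.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    """Lowercase and split into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def fold_plural(token: str) -> str:
    """Crude plural folding so 'chews' and 'chew' roll up together."""
    if len(token) > 3 and token.endswith("s") and not token.endswith("ss"):
        return token[:-1]
    return token


def token_stats(rows, keyword):
    """Aggregate (search_term, spend, clicks, conversions) rows by word token.

    Each token is counted once per search term; tokens from the keyword are skipped.
    """
    skip = {fold_plural(t) for t in tokenize(keyword)}
    stats = defaultdict(lambda: {"spend": 0.0, "clicks": 0, "conversions": 0, "terms": 0})
    for term, spend, clicks, conversions in rows:
        for token in {fold_plural(t) for t in tokenize(term)} - skip:
            s = stats[token]
            s["spend"] += spend
            s["clicks"] += clicks
            s["conversions"] += conversions
            s["terms"] += 1
    return dict(stats)


rows = [  # hypothetical search-term report rows
    ("dog allergy chews", 30.0, 40, 6),
    ("probiotic chews for dog", 20.0, 25, 3),
    ("dog chew bed", 20.0, 25, 0),
    ("chew proof dog bed", 15.0, 18, 0),
    ("soft dog chew toys", 5.0, 6, 0),
]
stats = token_stats(rows, keyword="dog chew")
print(stats["bed"])  # {'spend': 35.0, 'clicks': 43, 'conversions': 0, 'terms': 2}
```

Rolling up to tokens pools evidence across search terms: no single ‘bed’ term has much data, but together they show 43 clicks and no conversions.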

We further strengthen our predictions by statistically modeling thresholds on several KPIs, including cost per conversion, clicks, and conversions. We also factor in the campaign’s target ACOS (advertising cost of sales).
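The exact statistical model is not described in the post. As one hedged example of a statistically grounded threshold, a token with zero conversions can be tested against the campaign’s baseline conversion rate p: if its clicks converted at rate p independently, the probability of seeing no conversions in n clicks is (1 - p)^n. The sketch below flags a token only when that probability falls below a significance level and the token has already spent more than the target ACOS allows for one average order. The function names, the 0.05 significance level, the average order value, and the baseline rate are assumptions.

```python
def zero_conversion_probability(clicks: int, baseline_cvr: float) -> float:
    """P(no conversions in `clicks` independent clicks, each converting at baseline_cvr)."""
    return (1.0 - baseline_cvr) ** clicks


def is_token_irrelevant(spend: float, clicks: int, conversions: int,
                        baseline_cvr: float, target_acos: float,
                        avg_order_value: float, alpha: float = 0.05) -> bool:
    """Hypothetical rule for zero-conversion tokens; converting tokens are never flagged here."""
    if conversions > 0:
        return False
    unlikely_by_chance = zero_conversion_probability(clicks, baseline_cvr) < alpha
    # Spend that the target ACOS would allow for a single average order.
    allowed_spend_per_order = target_acos * avg_order_value
    return unlikely_by_chance and spend > allowed_spend_per_order


baseline = 9 / 114  # hypothetical campaign conversion rate (9 conversions from 114 clicks)
print(is_token_irrelevant(35.0, 43, 0, baseline, target_acos=0.30, avg_order_value=25.0))  # True
print(is_token_irrelevant(5.0, 6, 0, baseline, target_acos=0.30, avg_order_value=25.0))    # False
```

The first call resembles a token like ‘bed’ with 43 clicks and no conversions; the second, with only 6 clicks, is not flagged because zero conversions in so few clicks is still plausible by chance. A fuller model would also judge converting tokens, for example by comparing cost per conversion with the target ACOS.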

In our most recent test, 98% of the word tokens identified by this model as irrelevant were indeed irrelevant to the mapped campaigns in the account.

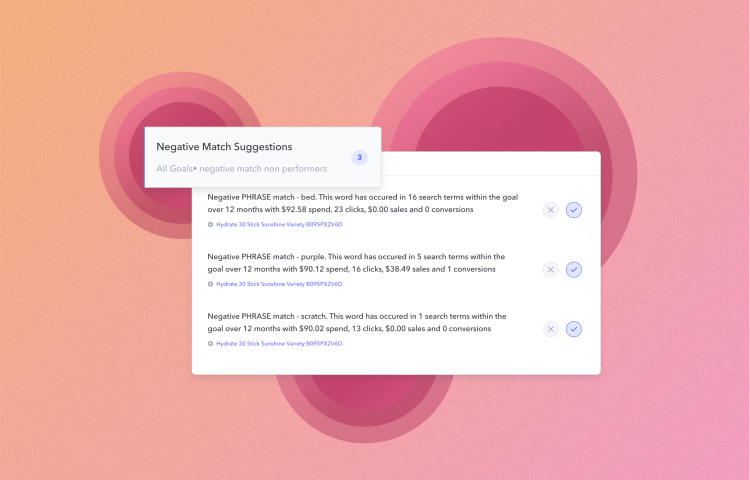

This gives us the ability to do two things:

Negative PHRASE match the irrelevant word token, so that no other search term containing that token ends up wasting spend.

Negative EXACT match the search terms that contain the irrelevant word token.
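A minimal Python sketch of both actions, assuming the irrelevant tokens have already been identified. Negative PHRASE matching is modeled as the phrase’s words appearing contiguously and in order in the search term; this simplification ignores any plural or misspelling variants Amazon may also handle. The ‘NEGATIVE_PHRASE’ and ‘NEGATIVE_EXACT’ labels and the function names are illustrative, not Amazon Ads API values.

```python
import re


def tokenize(text: str) -> list[str]:
    """Lowercase and split into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def blocked_by_negative_phrase(search_term: str, negative_phrase: str) -> bool:
    """Simplified phrase match: the phrase's words appear contiguously and in order."""
    term, phrase = tokenize(search_term), tokenize(negative_phrase)
    n = len(phrase)
    return any(term[i:i + n] == phrase for i in range(len(term) - n + 1))


def build_negatives(irrelevant_tokens: list[str], search_terms: list[str]) -> list[tuple[str, str]]:
    """Negative PHRASE each irrelevant token and negative EXACT each search term it blocks."""
    negatives = [(token, "NEGATIVE_PHRASE") for token in irrelevant_tokens]
    for term in search_terms:
        if any(blocked_by_negative_phrase(term, token) for token in irrelevant_tokens):
            negatives.append((term, "NEGATIVE_EXACT"))
    return negatives


terms = ["dog allergy chews", "dog chew bed", "chew proof dog bed", "soft dog chew toys"]
for text, match_type in build_negatives(["bed"], terms):
    print(match_type, text)
```

With ‘bed’ flagged, the sketch emits one negative phrase (‘bed’) plus negative exacts for ‘dog chew bed’ and ‘chew proof dog bed’, while ‘dog allergy chews’ stays eligible.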

Our implementation of irrelevant ad spend identification saves our clients tedious hours of manually hunting for search terms that waste ad spend, and helps improve ROAS (Return on Ad Spend) on their campaigns.
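Why cutting irrelevant spend raises ROAS follows from the standard definitions, ROAS = sales / ad spend and ACOS = ad spend / sales. Let C be total ad spend, S attributed sales, and W the part of C wasted on search terms that produced no attributed sales. The relation below assumes that negating those terms leaves S unchanged, which is an idealization:

```latex
\begin{aligned}
\mathrm{ROAS} &= \frac{S}{C}, \qquad \mathrm{ACOS} = \frac{C}{S} = \frac{1}{\mathrm{ROAS}} \\
\mathrm{ROAS}' &= \frac{S}{C - W} > \frac{S}{C} = \mathrm{ROAS} \qquad \text{for } S > 0,\ 0 < W < C
\end{aligned}
```

For hypothetical values S = 1000, C = 400, and W = 150, ROAS rises from 2.5 to 4.0 and ACOS falls from 40% to 25%.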

Using Dask dataframes and Managed Notebooks on GCP, we are able to run this computationally intensive model at our desired frequency.

This was a fascinating project for our team because of how broad the scope of wasted ad spend is, and how insightful it is to home in on irrelevance, which can be extremely tricky.

If you feel your organization is not maximizing the potential of your budget, reach out to us at hello@perpetua.io and we’d be happy to help.

To get started or learn more about how Perpetua can help you scale your Amazon Advertising business, contact us at hello@perpetua.io.
